A good way to learn something is to try to explain it to somebody else. This is the essence of the Feynman method of learning [5]. I believe Feynman suggested that the “somebody” should be a six-year-old.

In the same vein, I have found that turning thoughts into text (and LaTeX formulas) and then reading them back to see whether they make sense is a useful tool for structuring thoughts and ideas [6]. From time to time I therefore put tentative insights into writing on this blog for myself and the world to review.

What clearly you cannot say, you do not know;

with thought the word is born on lips of man;

what’s dimly said is dimly thought.

Esaias Tegnér. Epilogue at an academic graduation in Lund, 1820. Unknown translator.

Learning is an iterative process, so I take the liberty of revising earlier posts as I learn more. Hopefully they will eventually coalesce into something reasonably consistent.

Active inference framework

I have set out to try to understand the active inference framework (AIF) that is claimed to provide a unified model for human and animal perception, learning, decision making, and action [2].

The theory of active inference was first proposed by Karl Friston based on ideas that go back to the 1900s.

AIF has connections to several other topics that I’m interested in. There seem to be some interesting and potentially important associations between AIF and machine learning. AIF can possibly contribute to the understanding of the mental health conditions that affect many people today, especially the young. AIF is perhaps also, as Anil Seth claims in his book Being You [1], a waypoint on the road to understanding the greatest of mysteries, consciousness.

The central claim by Karl Friston [2] is that AIF explains both perception and action planning by biological organisms. I will introduce the framework with a simple example in this post before moving on to the mathematics that can be quite dense.

Key ideas

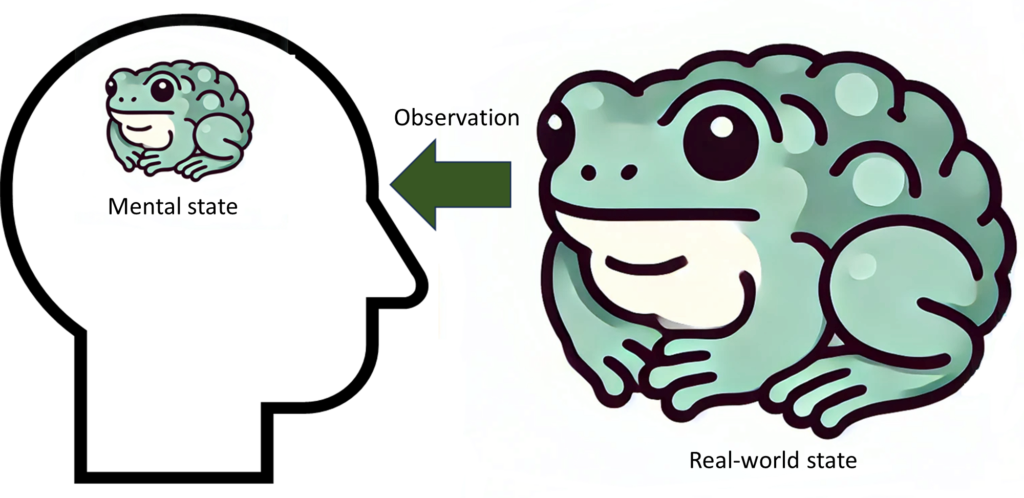

Organisms such as humans make sense of the real world by observing it and creating mental states that are their best guesses of some real-world states. The mental states constitute the organism’s knowledge about the real world. An organism never has direct, unlimited access to the true real-world states, only observations of a limited set of characteristics of those states. The mapping from real-world states to mental states has been honed by evolution and further enhanced by individual learning to be maximally useful but not necessarily maximally realistic.

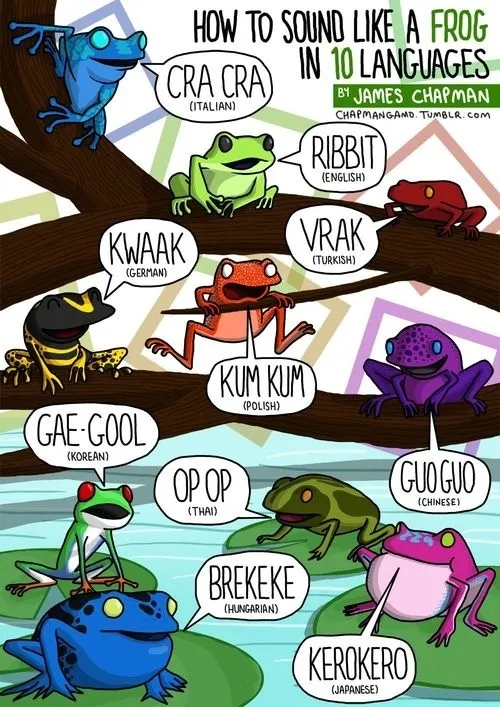

In the illustration below an organism (in this case a human) observes a frog, mostly visually, but perhaps also aurally (I was shocked to learn that English-speaking frogs say “ribbit” – what?). Simply put, the organism “browses” through a number of learned mental states representing different real-world objects and phenomena and generates the most likely observation for each mental state. If the generated observation is similar to the actual observation, then the corresponding mental state is assigned a high probability. If one mental state, say the one that represents a frog, gets a high enough probability, then the organism says (if it is a human) that it believes it observes a frog. More on this below.

A mental state can also represent the state of the organism’s own body. The mental state of fear can for instance be inferred from observations of vital parameters like increased attention, increased heart rate, and higher level of adrenaline. The idea is that these vital parameters don’t change because we feel fear; we feel fear because the vital parameters change; the observations change [1].

The inference of a likely mental state by generating and matching observations is performed using a generative model (see note 1) that describes the probabilistic relationship between the observations the organism makes and corresponding mental states (representing real-world states). The generative model is defined as the joint probability distribution \(p(s, o)\) where \(o\) denotes an observation and \(s\) a mental state.

The real-world process generating the observations is called the generative process. The generative model is a model of the real-world generative process but they are not the same.

The generative model can, according to basic probability theory, be expressed as a product of two probability distributions, the likelihood and the prior: \(p(s, o) = p(o \mid s)p(s)\).
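As a minimal sketch of this factorization, the Python snippet below builds a joint table from a prior and a likelihood. The state and observation names, all the numbers, and the helper name `joint_distribution` are illustrative assumptions made for this sketch; they are not prescribed by the framework.

```python
# Illustrative numbers only (assumptions made for this sketch).
prior = {"frog": 0.25, "lizard": 0.75}  # p(s)
likelihood = {  # p(o | s); each row sums to 1
    "frog": {"jumps": 0.8, "crawls": 0.2},
    "lizard": {"jumps": 0.1, "crawls": 0.9},
}


def joint_distribution(prior, likelihood):
    """Return p(s, o) = p(o | s) * p(s) as a dict keyed by (s, o)."""
    return {
        (s, o): p_o_given_s * prior[s]
        for s, row in likelihood.items()
        for o, p_o_given_s in row.items()
    }


joint = joint_distribution(prior, likelihood)
for (s, o), p in joint.items():
    print(f"p({s}, {o}) = {p:.3f}")
print("total:", round(sum(joint.values()), 10))
```

The entries of the joint table sum to one, as any joint probability distribution must.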

Priors, \(p(s)\), represent the probabilities of the organism’s mental states prior to an observation, its expected mental states. If it was raining five minutes ago, then the expected current mental state is also rain.

Likelihoods, \(p(o \mid s)\), represent the probabilities of observations given a mental state \(s\). \(p(o \mid s)\) models the organism’s sensory system. It yields the probability of the actual observation for the given mental state. A high probability means that the mental state is likely.

As introduced above, the inference process is often described in terms of minimization of the discrepancy between the expected observation given a hypothetical mental state and the actual observation. When the two observations match, we have the best matching mental state. To be able to do this comparison, the brain would need to use \(p(s, o)\) to generate the expected observation and at some level in the brain compare it to the incoming actual observation. An alternative discrepancy measure is the probability of the actual observation given the hypothetical mental state, \(p(o \mid s)\). The higher the probability, the lower the discrepancy. By looking up the probability directly from \(p(o \mid s)\), the brain would not need to generate observations. The brain may combine both methods. More on this in the next post.

Mental states are not a foolproof way to know something about the real world. We see the world in a way that facilitates survival and procreation. We have a “user interface” to the world in Donald Hoffman’s words [7]. An example of such a user interface feature is our subjective experience of colors. Colors don’t exist in the real world but they are useful to us. Another example is that of matter. Matter in our intuitive sense doesn’t exist. At the bottom we only find quantum fields and forces and empty space.

We don’t have mental states that match all real-world states. Most people for instance don’t have a useful mental state corresponding to a black hole.

On the other hand, we have mental states that have no objective real-world existence. Examples of such mental states are those of a nation or of money. Money mostly exists as bits on some computer hard drive. Nations are equally hard to find in nature.

Some organisms update their generative models over time by learning from experience. The generative models of simple organisms are rather hard-coded.

Action inference

In addition to expected mental states and expected observations, the organism also maintains probability distributions of desired mental states and desired observations (see note 2). This means that at least complex organisms have not one but (at least) two generative models: one containing expectations and one containing desires. (A bacterium may not have desires that are different from its expectations so it may manage with one model.)

The organism evaluates the valence of its current situation based on how close the actual mental state or observation is to a desired one. It also selects actions to increase the probability of such desired mental states or observations in the future. We call this process action inference. Action inference will be a topic of future posts.

A desired mental state held by a fish is to be immersed in water of the right temperature and salinity. Humans have many levels of desired mental states and observations from the right body temperature to a meaningful life.

Active inference presents a shift from the traditional view that an organism passively receives inputs through the senses, processes these inputs, and then decides on an action. Instead, in the AIF, the organism continuously generates predictions about what it expects to observe now and what it desires to observe in the future. When there’s a mismatch between the observations predicted by the generative model and the actual observations, a prediction error arises. The organism tries continuously to minimize the prediction error, either by updating its mental state or by taking actions to make the world more in line with its desired mental states.

Whether to observe or to act depends on the context. During food foraging, there is a strong emphasis on action to reach the desired mental state (to be satiated). In contrast, while watching a movie, the emphasis might lean more towards passive observation continuously adjusting one’s mental states according to what plays out on the screen (having hopefully before that reached the desired mental state that one is enjoying the movie).

A simple example

Let’s look at how a human actor, according to AIF, could optimally infer knowledge about the state of the real world based on their observations in an extremely simple scenario.

We assume that the actor has seen frogs and lizards before so they have a fairly accurate one-to-one mapping from the real-world amphibians and reptiles to corresponding mental states. We further assume that there are only two kinds of animals in a garden, frogs and lizards. Frogs are prone to jumping quite often. The lizards also jump but more seldom. We also assume that the actor has forgotten their eyeglasses in the house so that they can’t really tell a frog from a lizard just by looking at it but they can discern whether it jumps or crawls (yes, this is a contrived scenario getting more contrived but please bear with me).

The actor knows based on a census that there are more lizards than frogs in the garden. This knowledge, when quantified, is the prior. The actor also knows based on some experience that frogs jump much more often than lizards do. This knowledge, also when quantified, is called likelihood. The prior and the likelihood constitute the actor’s generative model.

The actor now observes a random animal for two minutes. They don’t see what animal it is because of their poor eyesight but they can see that the animal jumps. What mental state does the actor arrive at, frog or lizard?

Nothing is certain

We cannot be certain about much in this world (except perhaps death and taxes). In the context of active inference there is uncertainty about the accuracy of the observation (aleatoric uncertainty) and about the accuracy of the brain’s generative model (epistemic uncertainty).

When I suggested to Bard, the AI, that when I see a cat on the street I’m pretty sure it’s a cat and not a gorilla it replied “it is possible that the cat we see on the street is actually a very large cat, or that it is a gorilla that has been dressed up as a cat”. So take it from Bard, nothing is certain.

When we are uncertain, we need to describe the world in terms of probabilities and probability distributions. We will say things like “the animal is a frog with 73% probability” (and therefore, in a garden with only two species, a lizard with 27% probability). Bayes’ theorem and AIF are all about probabilities, not certainties.

Notation

When I don’t understand something mathematical or technical, it is frequently due to confusing or unfamiliar notation or unclear ontology. (The other times it is down to cognitive limitations I guess.) Before I continue, I will therefore introduce some notation that I hope I can stick to in this and future posts. I try to use as commonly accepted notation as possible but unfortunately there is no standard notation in mathematics.

Active inference is about observations, mental states, and probability distributions (probability mass functions and probability density functions). Observations and states can be discrete or continuous. Observations that can be enumerated or named, like \(\text{jumps}\), are discrete. Observations that can be measured, like the body temperature, are continuous.

Discrete observations and mental states are events in the general vocabulary of probability theory. In this post we only consider discrete observations and mental states.

Potential observations are represented by a vector of events:

$$\pmb{\mathcal{O}} = [\mathcal{O}_1, \mathcal{O}_2, \ldots, \mathcal{O}_n] = [\texttt{jumps}, \texttt{crawls}, \ldots]$$

\(\pmb{\mathcal{O}}\) is a vector of potential observations. It doesn’t by itself say anything about what observations have been made or are likely to be made. We therefore also need to assign a probability \(P\) to each observation, in this case \(P(\texttt{jumps})\) and \(P(\texttt{crawls})\) respectively. We collect these probabilities into a vector:

$$\pmb{\omega} = [\omega_1, \omega_2, \ldots, \omega_n] = [P(\texttt{jumps}), P(\texttt{crawls}), \ldots]$$

The vector of observations \(\pmb{\mathcal{O}}\) and the vector of probabilities \(\pmb{\omega}\) are assumed to be matched element-wise so that \(P(\mathcal{O}_i) = \omega_i\).

For formal mathematical treatment, all events need to be mapped to random variables, real numbers representing the different events. This is a technicality but will make things more mathematically consistent.

In our case \(\texttt{jumps}\) could for instance be mapped to \(0\) and \(\texttt{crawls}\) to \(1\) (the mapping is arbitrary for discrete events without an order). In general we do the mapping \(\pmb{\mathcal{X}} \mapsto \pmb{X}\), where \(\pmb{\mathcal{X}}\) is an event and \(\pmb{X} \in \mathbb{R}\).

The observation events are analogously mapped to observation random variables: \(\pmb{\mathcal{O}} \mapsto \pmb{O}\). When referring to a certain value of a random variable such as an observation, we use lower case letters, e.g., \(P(O = o)\).

Continuous observations, such as body temperature, and continuous mental states can be represented by random variables directly; there is no need for the event concept.

A probability mass function [3], \(p(x)\), is a function that returns the probability of a random variable value \(x\) such that \(p(x) = P(X = x)\). It is often possible to define a probability mass function analytically, which makes random variables in some ways easier to use than bare-bones events, for which probabilities need to be explicitly defined for each event. Also, continuous random variables, like the body temperature, don’t have any meaningful representation in the event space.

Note that a probability is denoted with a capital \(P\) while a probability mass function (probability distribution) is denoted with a lower case \(p\).

Since our observations are discrete, the probability mass function for the observations that we are interested in would be:

$$p(o) = \text{Cat}(o, \pmb{\omega})$$

This is called a categorical probability mass function and is basically a “lookup table” of probabilities such that \(p(o_i) = P(O = o_i) = \omega_i\). The interesting aspect of a categorical probability mass function is the vector of probabilities. In practical situations it is often useful to reason about the probabilities directly and to “forget” that we pull the probabilities out from a probability mass function. Read on to see what I mean.
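The “lookup table” view can be sketched in a few lines of Python. The mapping of events to the integers 0 and 1, the values in `omega`, and the function name `cat` are hypothetical choices made for this sketch only.

```python
# Hypothetical mapping from observation events to random-variable values.
OBSERVATION_VALUES = {"jumps": 0, "crawls": 1}

# Hypothetical probability vector omega; it must sum to 1.
omega = [0.3, 0.7]


def cat(o, probabilities):
    """Categorical pmf: return P(O = o) by looking up index o."""
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return probabilities[o]


p_jumps = cat(OBSERVATION_VALUES["jumps"], omega)
p_crawls = cat(OBSERVATION_VALUES["crawls"], omega)
print(p_jumps, p_crawls)
```

The function does nothing more than index into the vector, which is why it is often convenient to work with the vector of probabilities directly.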

Discrete mental states can also be seen as events:

$$\pmb{\mathcal{S}} = [\mathcal{S}_1, \mathcal{S}_2, \ldots, \mathcal{S}_n] = [\texttt{frog}, \texttt{lizard}, \ldots]$$

The probability of each mental state is represented by the vector:

$$\pmb{\sigma} = [\sigma_1, \sigma_2, \ldots, \sigma_n] = [P(\texttt{frog}), P(\texttt{lizard}), \ldots]$$

The mental state events are mapped to random variables: \(\pmb{\mathcal{S}} \mapsto \pmb{S}\).

The probability distribution of the mental state random variable is:

$$p(s) = \text{Cat}(s, \pmb{\sigma})$$

Back to frogs and lizards

Assume that the prior probabilities held by the actor for finding frogs and lizards in the garden are:

$$\pmb{\sigma} = [0.25, 0.75]$$

This means that before an observation is made, the actor assigns a \(75\%\) probability to the mental state \(\texttt{lizard}\) and a \(25\%\) probability to the mental state \(\texttt{frog}\).

Assume that the likelihood for each of the species in the garden jumping within two minutes is represented by the vector:

$$[P(\texttt{jumps} \mid \texttt{frog}), P(\texttt{jumps} \mid \texttt{lizard})] = [0.8, 0.1]$$

\(P(\texttt{jumps} \mid \texttt{frog})\) should be read as “the probability for observing jumping given that the animal is a frog”.

This means that frogs are eight times more likely to jump within a two-minute period than lizards. In other words: if there were as many frogs as lizards in the garden, then, when the actor sees an animal jumping in the garden, they would believe it to be a frog eight times out of nine; for every eight jumping frogs there would be one jumping lizard.

With three times as many lizards as frogs, the number of jumping lizards goes up by a factor of three, meaning that when the actor sees an animal jumping in the garden, they would believe it is a frog only eight times out of eleven; for every eight jumping frogs there would be three jumping lizards.
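The counting argument can be checked with exact fractions. This is only a sketch: the head counts of 100 and 300 animals and the function name `frog_share_among_jumpers` are hypothetical, and only the ratio of frogs to lizards matters for the result.

```python
from fractions import Fraction


def frog_share_among_jumpers(n_frogs, n_lizards):
    """Expected share of frogs among the animals that jump within two minutes."""
    p_jump_frog = Fraction(8, 10)    # P(jumps | frog) from the text
    p_jump_lizard = Fraction(1, 10)  # P(jumps | lizard) from the text
    jumping_frogs = p_jump_frog * n_frogs
    jumping_lizards = p_jump_lizard * n_lizards
    return jumping_frogs / (jumping_frogs + jumping_lizards)


# Head counts are hypothetical; only the frog-to-lizard ratio matters.
equal_numbers = frog_share_among_jumpers(100, 100)
more_lizards = frog_share_among_jumpers(100, 300)
print(equal_numbers)  # 8/9
print(more_lizards)   # 8/11
```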

The actor saw the animal jump. The probabilities for the animal being a frog and a lizard are therefore given by the vector:

$$[P(\texttt{frog} \mid \texttt{jumps}), P(\texttt{lizard} \mid \texttt{jumps})] = [\frac{8}{11}, \frac{3}{11}] \approx [0.73, 0.27]$$

meaning that the actor assigns a \(73\%\) probability to the mental state \(\texttt{frog}\) and \(27\%\) probability to the mental state \(\texttt{lizard}\). This is a major update from the prior of \(25\%\) probability of a \(\texttt{frog}\) and a \(75\%\) probability of a \(\texttt{lizard}\).
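One way to build trust in these numbers is to simulate the garden. The sketch below draws many random animals using the prior and the jumping probabilities from the text, and then looks at how many of the jumping animals were frogs. The sample size, the seed, and the function name `simulate_garden` are arbitrary assumptions.

```python
import random


def simulate_garden(n_animals=200_000, seed=0):
    """Estimate P(frog | jumps) by sampling animals and their behavior."""
    rng = random.Random(seed)
    jumping_frogs = 0
    jumping_total = 0
    for _ in range(n_animals):
        is_frog = rng.random() < 0.25     # prior: P(frog) = 0.25
        p_jump = 0.8 if is_frog else 0.1  # probability of jumping within two minutes
        if rng.random() < p_jump:
            jumping_total += 1
            jumping_frogs += is_frog      # True counts as 1, False as 0
    return jumping_frogs / jumping_total


estimate = simulate_garden()
print(f"estimated P(frog | jumps) = {estimate:.3f}")
```

With a large enough sample the estimate should land close to \(8/11 \approx 0.73\).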

With some more math

Below follows an alternative account of the same example, this time with a little more mathematics, introducing Bayes’ theorem.

As stated above, \(p(s)\) and \(p(o \mid s)\), the prior and the likelihood together define the brain’s current model of the (very limited) world.

The probabilities assigned to the two mental states \(\texttt{frog}\) and \(\texttt{lizard}\) given the observation \(o\) are given by:

$$p(s \mid o)= \frac{p(o \mid s)p(s)}{p(o)}$$

Let’s put some numbers in the numerator (\(\odot\) indicates element-wise multiplication):

$$[P(\texttt{jumps} \mid \texttt{frog})P(\texttt{frog}), P(\texttt{jumps} \mid \texttt{lizard})P(\texttt{lizard})] = [0.8, 0.1] \odot [0.25, 0.75] = [0.2, 0.075]$$

The denominator quantifies the probability of a jumping observation. It can in this simple case, assuming that there are only two types of animals in the garden and that their jumping propensities are known, be calculated using the law of total probability:

$$P(\texttt{jumps}) = P(\texttt{jumps} \mid \texttt{frog})P(\texttt{frog}) + P(\texttt{jumps} \mid \texttt{lizard})P(\texttt{lizard}) = 0.8 \cdot 0.25 + 0.1 \cdot 0.75 = 0.275$$

The actor thus assigns the probabilities for the mental states \(\texttt{frog}\) and \(\texttt{lizard}\) respectively as follows:

$$[P(\texttt{frog} \mid \texttt{jumps}), P(\texttt{lizard} \mid \texttt{jumps})]= [0.2, 0.075] / 0.275 \approx [0.73, 0.27]$$

This is the same result as above. Note how the fact that there are three times as many lizards as frogs, as expressed in the prior, increases the probability of the jumping animal being a lizard even though lizards don’t readily jump. Bayes’ theorem sometimes gives surprising but always correct answers.
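The whole calculation fits in a small Python function. This is a sketch for the discrete two-state garden only; the function name `posterior` and the two extra priors in the loop are hypothetical and are included just to show how much the prior matters.

```python
def posterior(prior, likelihood):
    """Bayes' theorem for discrete states.

    prior:      list of P(s), one entry per state
    likelihood: list of P(o | s) for the observation actually made
    """
    numerator = [lik * p for lik, p in zip(likelihood, prior)]  # element-wise product
    evidence = sum(numerator)  # P(o), by the law of total probability
    return [n / evidence for n in numerator]


jump_likelihood = [0.8, 0.1]  # [P(jumps | frog), P(jumps | lizard)]

# The first prior is the one from the text; the other two are hypothetical.
for prior in ([0.25, 0.75], [0.5, 0.5], [0.1, 0.9]):
    p_frog, p_lizard = posterior(prior, jump_likelihood)
    print(f"prior {prior} -> posterior [{p_frog:.2f}, {p_lizard:.2f}]")
```

With equal numbers of frogs and lizards the jumping animal is a frog with probability \(8/9\). When the frogs make up only a tenth of the garden, the same observation leaves the two mental states almost equally probable.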

We will take a deeper look into the mathematics needed in real-world situations, outside the simple garden, in the next posts.

Links

[1] Anil Seth. Being You.

[2] Thomas Parr, Giovanni Pezzulo, Karl J. Friston. Active Inference.

[3] MIT Open Courseware. Introduction to Probability and Statistics.

[4] Ryan Smith, Karl J. Friston, Christopher J. Whyte. A step-by-step tutorial on active inference and its application to empirical data. Journal of Mathematical Psychology. Volume 107. 2022.

[5] Farnham Street Blog. The Feynman Technique: Master the Art of Learning.

[6] Youki Terada. Why Students Should Write in All Subjects. Edutopia.

[7] Donald Hoffman. The Case Against Reality.

1. A generative model approximates the full joint probability distribution between input and output, in this case between observations and states, \(p(s, o)\). This is in contrast to a discriminative model, which is “one way”. The likelihood \(p(o \mid s)\) is a discriminative model.

2. An actor can express its desires both in terms of mental states and observations, depending on the type of desire. When I write “desired observations” I mean “desired observations or desired mental states”. More on this in later posts.